How I Built My Own WASM Cloud with the Fermyon Platform

In this post, I describe my journey in setting up my own WASM cloud: how I provisioned the infrastructure alongside the Fermyon Stack on Hetzner Cloud, and how I compiled a Go app to WASM and deployed it to that cloud.

I start by briefly covering the differences between the Fermyon Platform (self-hosted cloud) and the Fermyon Cloud (their freemium, managed service). You'll learn how to use Terraform to provision the infrastructure alongside the Fermyon Stack (Nomad, Consul, and other services) on Hetzner Cloud for less than 10 EUR/month. Finally, you'll see how I created a simple HTTP server in Go using the Spin framework, compiled it to WASM using TinyGo, and deployed it to my own cloud.

This is something I did for fun and to learn more about the open-source Fermyon Platform in a couple of days, so bear in mind this is not a fully qualified or fault-tolerant production setup.

What's the Fermyon Platform?

The Fermyon Platform is an open-source, self-hosted cloud application platform for WebAssembly microservices. It allows you to host Spin applications and other compatible WebAssembly workloads.

The Fermyon Stack

How does Fermyon manage to deploy WASM apps to the self-hosted cloud platform?

Fermyon runs on the HashiCorp stack:

- Nomad: to schedule and orchestrate workloads across servers.

- Consul: to route traffic to your Spin applications.

Also, it relies on other open-source software components:

- Bindle: to package and distribute Spin applications.

- Traefik: the reverse proxy/load balancer.

- Hippo: the web UI for managing Spin-based applications.

What's the difference with Fermyon Cloud?

Fermyon Cloud is an expansion of the Fermyon Platform, so the self-hosted Platform is a bit more limited in terms of features and design.

In this tweet, I asked the CEO of Fermyon, Matt Butcher, whether there are plans for the Fermyon Platform to reach parity with Fermyon Cloud in features and design. In his answer, Matt acknowledges that the platform currently lags behind in some features, and he is uncertain about the direction it will take and whether it will ever catch up with the cloud offering.

To give an example, the Fermyon Platform UI is visually less appealing compared to the Fermyon Cloud, persisting data locally with the KV store is not supported, and some of the dependencies such as the Spin version are quite old (v0.6.0 vs v1.3.0 as of the time of this writing).

The Fermyon installer

The Fermyon installer is the open-source project that constitutes the Fermyon Platform. It is composed of several Terraform files and scripts to bring up the infrastructure and the HashiCorp stack.

The Fermyon installer supports multiple well-known clouds such as AWS, Azure, and GCP, among many others. However, one specific cloud was missing: Hetzner Cloud. Given how much of a fan I am of it, I couldn't miss the opportunity to open a PR adding Hetzner as a cloud provider for the Fermyon Platform.

Why Hetzner Cloud?

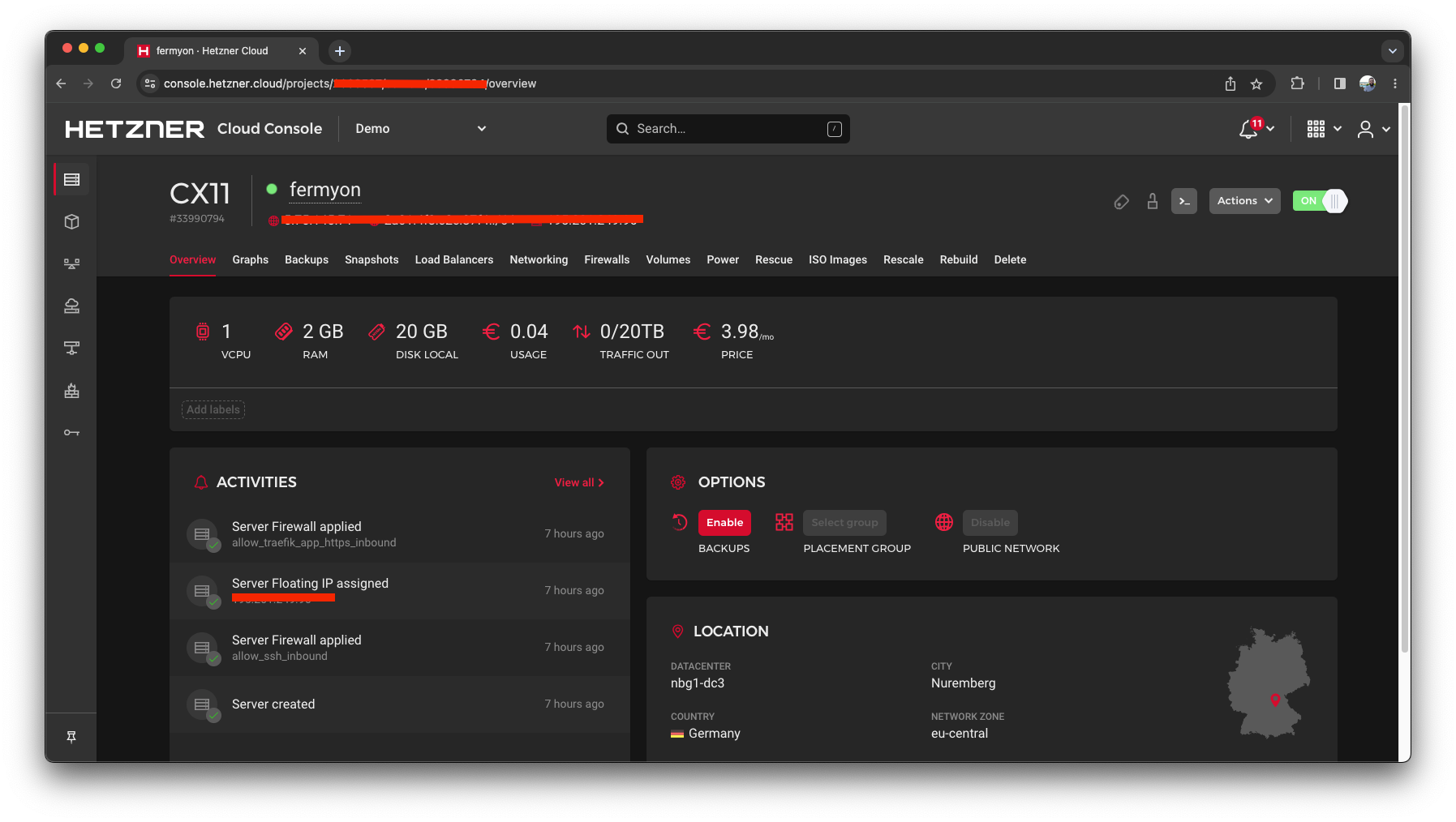

Using Hetzner can be very convenient to experiment with the Fermyon Platform as, at the time of writing, it's very cheap compared to other cloud providers: 8.22 EUR/month:

- 3.98 EUR/month for the cheapest server (1vCPU, 2GB RAM)

- 0.61 EUR/month for the server IPv4 address

- 3.63 EUR/month for the floating IP address
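As a quick sanity check on the total, here is a minimal Go sketch that adds up the three line items above. The constant and function names are my own, and the prices are simply the ones quoted at the time of writing, expressed in euro cents to avoid floating-point rounding.

```go
package main

import "fmt"

// Monthly prices in euro cents, copied from the list above.
const (
	serverCents     = 398 // cheapest server (1 vCPU, 2 GB RAM)
	ipv4Cents       = 61  // server IPv4 address
	floatingIPCents = 363 // floating IP address
)

// monthlyTotalCents adds up the individual line items.
func monthlyTotalCents() int {
	return serverCents + ipv4Cents + floatingIPCents
}

func main() {
	total := monthlyTotalCents()
	// Prints "8.22 EUR/month".
	fmt.Printf("%d.%02d EUR/month\n", total/100, total%100)
}
```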

If you want to set up your WASM cloud on Hetzner, feel free to use my referral link. You'll receive 20 EUR in cloud credits as soon as you sign up.

Deploying the infrastructure

The Hetzner Quick-start uses Terraform to deploy a lightweight, working example of Fermyon on Hetzner, using only a minimal array of resources needed to run the services.

First, you need to generate an API token with read and write permissions. The quick-start will create the following resources in the provided Hetzner account:

- 1 Server

  - Name: ${var.server_name} (default: fermyon)

  - Type: ${var.server_type} (default: cx11)

- 1 Floating IP address (associated with instance): this is useful as it won't change with instance reboots and is a known value for constructing Hippo and Bindle URLs.

- 1 Custom firewall: inbound connections allowed for ports 22, 80, and 443. See var.allowed_inbound_cidr_blocks for allowed origin IP addresses. All outbound connections are allowed.

- 1 SSH keypair: see var.allowed_ssh_cidr_blocks for allowed origin IP addresses.

First of all, let's clone the installer:

git clone https://github.com/fermyon/installer.git

Navigate to the hetzner/terraform/single-node directory and initialize Terraform:

terraform init

Initializing the backend...

Initializing provider plugins...

- Finding latest version of hashicorp/tls...

- Finding latest version of hashicorp/random...

...

...

Terraform has been successfully initialized!

Next, let's configure some aspects of the infrastructure through Terraform variables, such as enabling Let's Encrypt to provision SSL certificates not only for the Hippo and Bindle services but also for the WASM apps that we'll deploy to our cloud. I also want to use my custom domain cloud.felipecruz.es so that, later on, I can refer to the apps with a fancy name.

terraform apply \
  -var='hcloud_token=nIzK...' \
  -var='enable_letsencrypt=true' \
  -var='dns_host=cloud.felipecruz.es'

After a few minutes, all the infrastructure resources will be successfully deployed and the Fermyon Stack will be up and running.

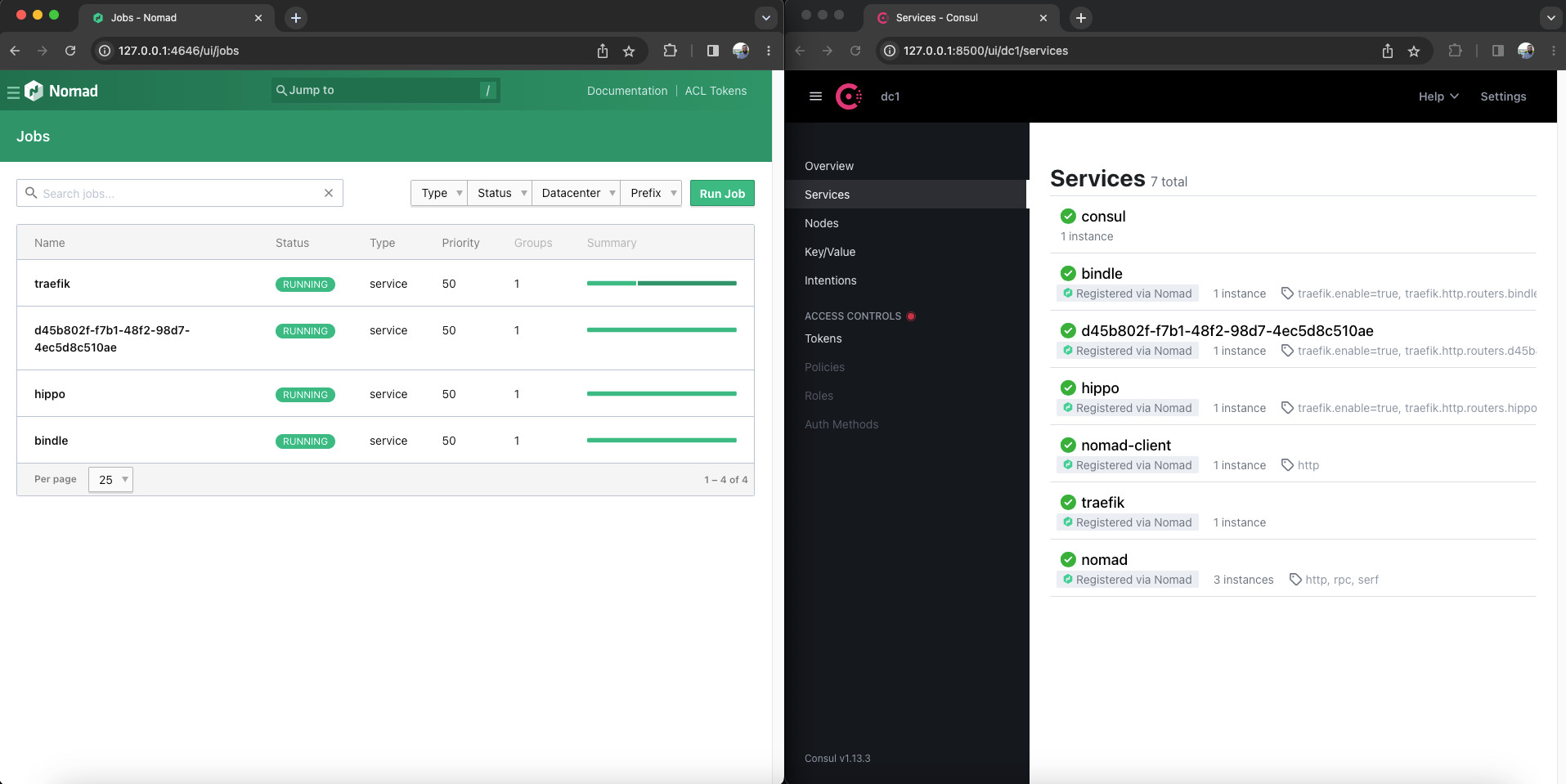

Accessing Nomad and Consul (optional)

You may wish to access the Nomad and Consul services from outside of the Hetzner instance. These services are not exposed to the public Internet so we can open an SSH tunnel to access them.

Nomad is configured to run on port 4646 and Consul on 8500. The following command sets up the local SSH tunnel and will run until stopped:

ssh -i hetzner_ssh_key \
  -L 4646:127.0.0.1:4646 \
  -L 8500:127.0.0.1:8500 \
  -N root@$(terraform output -raw public_ip_address)

You should now be able to interact with these services, for example by navigating in your browser to the Nomad dashboard at http://127.0.0.1:4646 or the Consul dashboard at http://127.0.0.1:8500.

(Additional ports may be added, for instance, 8200 for Vault, 8081 for Traefik, etc.)
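To illustrate how the tunnel command grows as ports are added, here is a small Go sketch that builds one -L flag per port, each forwarding a local port to the same port on the server's loopback interface. The forwardArgs helper is hypothetical and the root@<public-ip> placeholder stands in for the Terraform output; the ports are the ones mentioned above.

```go
package main

import (
	"fmt"
	"strings"
)

// forwardArgs returns one "-L port:127.0.0.1:port" pair per port, mirroring
// the flags used in the ssh command above.
func forwardArgs(ports []int) []string {
	args := make([]string, 0, 2*len(ports))
	for _, p := range ports {
		args = append(args, "-L", fmt.Sprintf("%d:127.0.0.1:%d", p, p))
	}
	return args
}

func main() {
	// Nomad and Consul, plus the optional Vault and Traefik ports.
	ports := []int{4646, 8500, 8200, 8081}
	fmt.Println("ssh -i hetzner_ssh_key",
		strings.Join(forwardArgs(ports), " "),
		"-N root@<public-ip>")
}
```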

Alternatively, the ports can be opened up at the Hetzner firewall level. Note, however, that these services currently run on unsecured HTTP ports, so it is highly encouraged to at least update the Terraform deployment to restrict inbound IP addresses (var.allowed_inbound_cidr_blocks). Otherwise, the entire Internet will have access to the Nomad and Consul instances.
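To make the effect of a CIDR allow-list more concrete, here is a minimal Go sketch of the matching rule: a source address is let through only if it falls inside one of the listed blocks. This only illustrates CIDR semantics and is not how the Hetzner firewall is implemented; the allowed helper and the addresses (taken from the documentation-reserved ranges) are assumptions of mine.

```go
package main

import (
	"fmt"
	"net/netip"
)

// allowed reports whether ip falls inside at least one of the CIDR blocks.
func allowed(ip string, cidrBlocks []string) (bool, error) {
	addr, err := netip.ParseAddr(ip)
	if err != nil {
		return false, err
	}
	for _, block := range cidrBlocks {
		prefix, err := netip.ParsePrefix(block)
		if err != nil {
			return false, err
		}
		if prefix.Contains(addr) {
			return true, nil
		}
	}
	return false, nil
}

func main() {
	// Hypothetical value for var.allowed_inbound_cidr_blocks: a single IP.
	blocks := []string{"203.0.113.7/32"}
	for _, ip := range []string{"203.0.113.7", "198.51.100.20"} {
		ok, err := allowed(ip, blocks)
		fmt.Println(ip, ok, err)
	}
}
```

A block such as 0.0.0.0/0 matches every IPv4 address, which is exactly the "entire Internet" case the paragraph above warns about.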

Building a WASM app with Spin

Spin is a framework for building and running event-driven microservice apps with WASM. The quickest way to start a new app is to install and use a Spin template for your preferred language:

spin templates install --git https://github.com/fermyon/spin

Copying remote template source

Installing template redis-rust...

Installing template static-fileserver...

Installing template http-grain...

Installing template http-swift...

Installing template http-php...

Installing template http-c...

Installing template redirect...

Installing template http-rust...

Installing template http-go...

Installing template http-zig...

Installing template http-empty...

Installing template redis-go...

Installed 0 template(s)

Skipped 12 template(s)

+------------------------------------+
| Name                Reason skipped |
+====================================+
| http-c              Already exists |
| http-empty          Already exists |
| http-go             Already exists |
| http-grain          Already exists |
| http-php            Already exists |
| http-rust           Already exists |
| http-swift          Already exists |
| http-zig            Already exists |
| redirect            Already exists |
| redis-go            Already exists |
| redis-rust          Already exists |
| static-fileserver   Already exists |
+------------------------------------+

I'll be choosing the http-go template:

spin new http-go

Enter a name for your new application: http-go-app

Description: My HTTP Go app

HTTP base: /

HTTP path: /...

It's just a simple HTTP handler that returns the string Hello Fermyon!:

package main

import (
	"fmt"
	"net/http"

	spinhttp "github.com/fermyon/spin/sdk/go/http"
)

func init() {
	spinhttp.Handle(func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("Content-Type", "text/plain")
		fmt.Fprintln(w, "Hello Fermyon!")
	})
}

func main() {}

So, how do we compile the Go code to WASM? The spin.toml file created as part of the scaffolding contains the build instructions for the WASM component (see the last line):

spin_version = "1"

authors = ["felipecruz91 <***@***>"]

description = "My HTTP Go app"

name = "http-go-app"

trigger = { type = "http", base = "/" }

version = "0.1.0"

[[component]]

id = "http-go-app"

source = "main.wasm"

allowed_http_hosts = []

[component.trigger]

route = "/..."

[component.build]

command = "tinygo build -target=wasi -gc=leaking -no-debug -o main.wasm main.go"

You'll need the TinyGo compiler, as the standard Go compiler does not yet support the WASI standard.

spin build

Executing the build command for component http-go-app: tinygo build -target=wasi -gc=leaking -no-debug -o main.wasm main.go

Successfully ran the build command for the Spin components.

ls -lah main.wasm

-rwxr-xr-x 1 felipecruz staff 209K Jun 21 10:35 main.wasm

If you want, you can run the app locally to make sure it works before deploying it to the cloud:

spin up

Serving http://127.0.0.1:3000

Available Routes:

http-go-app: http://127.0.0.1:3000 (wildcard)

Deploying to my cloud

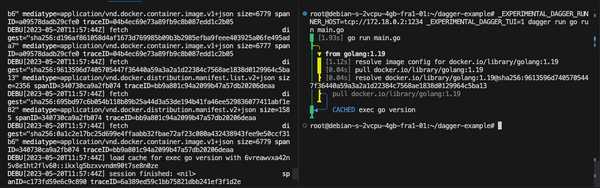

Once the infrastructure of the Fermyon Platform has been provisioned and the Go app is compiled to WASM, we can use Terraform to load the output values as environment variables in our terminal:

terraform output -raw environment

export DNS_DOMAIN=cloud.felipecruz.es

export HIPPO_URL=https://hippo.cloud.felipecruz.es

export HIPPO_USERNAME=admin

export HIPPO_PASSWORD="*********"

export BINDLE_URL=https://bindle.cloud.felipecruz.es/v1

To log into our newly created Fermyon Platform, use spin login with the following flags to authenticate with the username and password that were provisioned:

spin login --url ${HIPPO_URL} --auth-method username --username ${HIPPO_USERNAME} --password ${HIPPO_PASSWORD} --bindle-server ${BINDLE_URL}

Finally, the Go app can be deployed to the cloud:

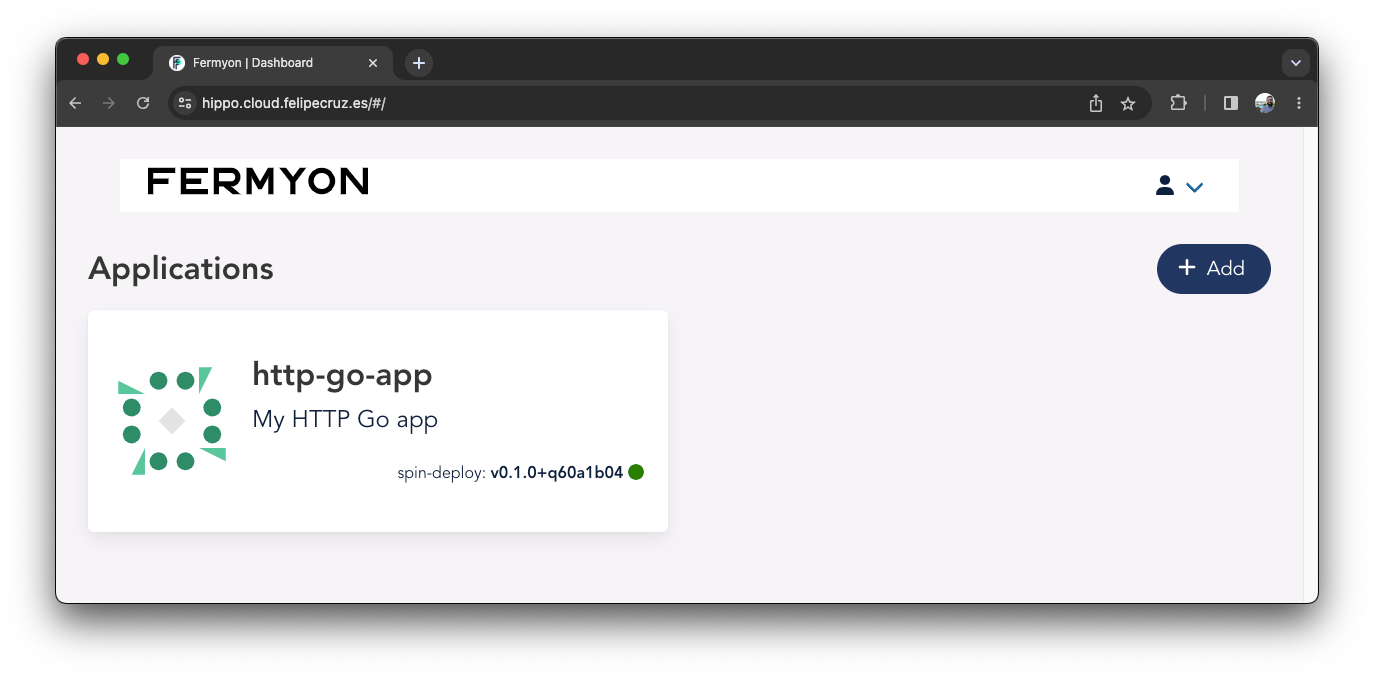

spin deploy

Uploading http-go-app version 0.1.0+q60a1b04...

Deployed http-go-app version 0.1.0+q60a1b04

Waiting for application to become ready...

Available Routes:

http-go-app: https://spin-deploy.http-go-app.hippo.cloud.felipecruz.es (wildcard)

Note that the route follows the format https://<channel>.<app>.hippo.cloud.felipecruz.es
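The route format can be sketched as a small Go helper. The routeURL function is hypothetical, and the pattern is inferred only from the single deployment shown above (channel spin-deploy, app http-go-app, and the DNS domain from the Terraform output), so treat it as an illustration and not as a documented Hippo rule.

```go
package main

import "fmt"

// routeURL assembles https://<channel>.<app>.hippo.<dnsDomain>, the shape
// of the route printed by spin deploy above.
func routeURL(channel, app, dnsDomain string) string {
	return fmt.Sprintf("https://%s.%s.hippo.%s", channel, app, dnsDomain)
}

func main() {
	// Prints https://spin-deploy.http-go-app.hippo.cloud.felipecruz.es
	fmt.Println(routeURL("spin-deploy", "http-go-app", "cloud.felipecruz.es"))
}
```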

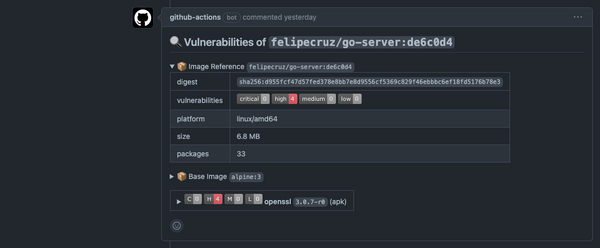

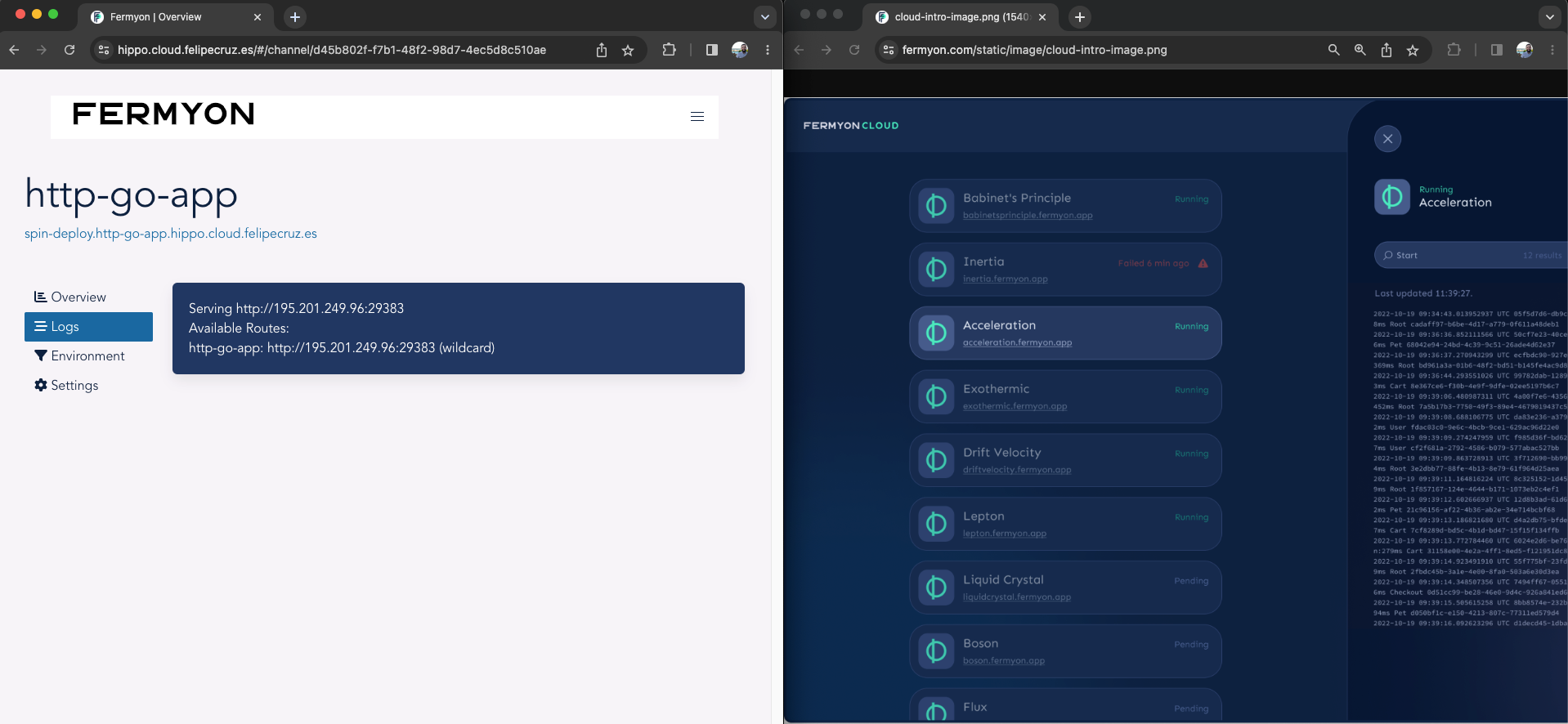

In Hippo - the web UI to manage Spin-based apps - we can find the recently deployed Go app:

🎉 We can reach the app running at https://spin-deploy.http-go-app.hippo.cloud.felipecruz.es
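A quick way to confirm what the app returns is a small smoke check. The sketch below is a plain Go program (standard toolchain, not TinyGo) with two hypothetical helpers: hello, which copies the body of the Spin handler shown earlier without importing the Spin SDK, and fetch, which issues a GET and returns the status and body. So that it runs without network access, it serves hello from an in-process test server; pointing fetch at the deployed URL should behave the same way, assuming the app is reachable.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"time"
)

// hello has the same body as the handler passed to spinhttp.Handle earlier.
func hello(w http.ResponseWriter, r *http.Request) {
	w.Header().Set("Content-Type", "text/plain")
	fmt.Fprintln(w, "Hello Fermyon!")
}

// fetch issues a GET request and returns the status code and body.
func fetch(client *http.Client, url string) (int, string, error) {
	resp, err := client.Get(url)
	if err != nil {
		return 0, "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return resp.StatusCode, "", err
	}
	return resp.StatusCode, string(body), nil
}

// localCheck serves hello from an in-process test server and fetches it,
// so the sketch does not depend on the deployed app being online.
func localCheck() (int, string, error) {
	srv := httptest.NewServer(http.HandlerFunc(hello))
	defer srv.Close()
	return fetch(&http.Client{Timeout: 5 * time.Second}, srv.URL)
}

func main() {
	status, body, err := localCheck()
	fmt.Printf("status=%d bytes=%d err=%v\n", status, len(body), err)
}
```

The body is "Hello Fermyon!" plus a trailing newline, 15 bytes in total, which lines up with the 15 bytes per request reported in the load test in the next section.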

Bombarding the WASM app

Of course, the next natural step is to find out how well the recently deployed WASM app performs in my shiny new cloud. Bear in mind the server, hosted in Nuremberg (Germany), is the smallest and most affordable one that Hetzner offers: 1vCPU and 2GB RAM.

I used hey to launch 1 million requests using 50 workers concurrently. The WASM app was able to serve 100% of all the requests successfully. The average duration of a request was 82.1 ms. The total duration of the load test was around 27 minutes and the request rate was around 607 req/s.
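These figures can be cross-checked with a bit of arithmetic. The Go sketch below recomputes the duration and request rate from the totals that hey reports, and compares the rate with a rough estimate from Little's law (workers divided by average latency). The function names are mine, and the estimate assumes each of the 50 workers waits for a response before sending its next request.

```go
package main

import "fmt"

// throughput is the observed rate: completed requests divided by wall time.
func throughput(requests int, totalSecs float64) float64 {
	return float64(requests) / totalSecs
}

// estimateFromLatency applies Little's law: with a fixed number of workers
// that each wait for a response before sending the next request, the rate
// is roughly workers divided by the average latency.
func estimateFromLatency(workers int, avgLatencySecs float64) float64 {
	return float64(workers) / avgLatencySecs
}

func main() {
	const (
		requests   = 1_000_000 // total requests sent
		totalSecs  = 1645.7398 // total duration of the run, in seconds
		avgLatency = 0.0821    // average request duration, in seconds
		workers    = 50        // concurrent workers
	)
	fmt.Printf("duration:  %.1f min\n", totalSecs/60)
	fmt.Printf("observed:  %.1f req/s\n", throughput(requests, totalSecs))
	fmt.Printf("estimated: %.1f req/s\n", estimateFromLatency(workers, avgLatency))
}
```

The observed and estimated rates land within a couple of requests per second of each other (roughly 608 versus 609). That suggests the measured rate is bounded by the client's concurrency and the round-trip latency, so it is not necessarily the most this server could handle.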

hey -n 1000000 https://spin-deploy.http-go-app.hippo.cloud.felipecruz.es/

Summary:

Total: 1645.7398 secs

Slowest: 1.0870 secs

Fastest: 0.0448 secs

Average: 0.0821 secs

Requests/sec: 607.6295

Total data: 15000000 bytes

Size/request: 15 bytes

Response time histogram:

0.045 [1] |

0.149 [999068] |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■

0.253 [847] |

0.357 [34] |

0.462 [0] |

0.566 [0] |

0.670 [0] |

0.774 [0] |

0.879 [0] |

0.983 [0] |

1.087 [50] |

Latency distribution:

10% in 0.0677 secs

25% in 0.0718 secs

50% in 0.0793 secs

75% in 0.0904 secs

90% in 0.1053 secs

95% in 0.1139 secs

99% in 0.1282 secs

Details (average, fastest, slowest):

DNS+dialup: 0.0000 secs, 0.0448 secs, 1.0870 secs

DNS-lookup: 0.0000 secs, 0.0000 secs, 0.0709 secs

req write: 0.0000 secs, 0.0000 secs, 0.0060 secs

resp wait: 0.0820 secs, 0.0447 secs, 0.8095 secs

resp read: 0.0001 secs, 0.0000 secs, 0.0078 secs

Status code distribution:

[200] 1000000 responses

Conclusion

As someone who loves to experiment with new tech, building my own cloud to host WASM apps was an entertaining and valuable experience. I did learn a lot about the components that constitute the Fermyon Platform, how to provision the infrastructure with Terraform, and how to use the Spin framework to build and deploy a WASM app to my self-hosted cloud.

Also, I must admit that the most difficult part was configuring the DNS records in AWS Route 53 so that Traefik could serve requests to the WASM app over TLS, something I decided to skip in this post to keep it simple.

If you're looking for a managed solution, visit their Fermyon Cloud pricing page.

Finally, I'm curious to see which direction start-ups and large enterprises that embrace WASM will take. Considering they are already deploying their regular apps as containers to Kubernetes-managed services in big cloud providers such as AWS or Azure, will they be willing to have an additional cloud just to deploy WASM workloads?

The overhead of managing multiple clouds is something that some companies won't be happy about. I really like the idea of treating WASM apps similarly to containers, where these two different types of workloads can coexist in the same Kubernetes cluster, for instance. This way, you could configure the worker nodes of the cluster with different runtimes: runc for Linux containers and spin, wasmedge, wasmtime, etc. for WASM.

Looking forward to seeing what the future brings!

Disclaimer: The content I have contributed to this blog post is my own and does not necessarily represent the views or opinions of my employer.